I’m now working on the project “PlasticAI”, which aims to detect plastic waste on beaches. As an experiment, I trained an object detection system on the custom dataset that I collected during Expedition 1 in June. The result demonstrates the power of object detection well. Since seeing is believing, I’ll show the outcome first.

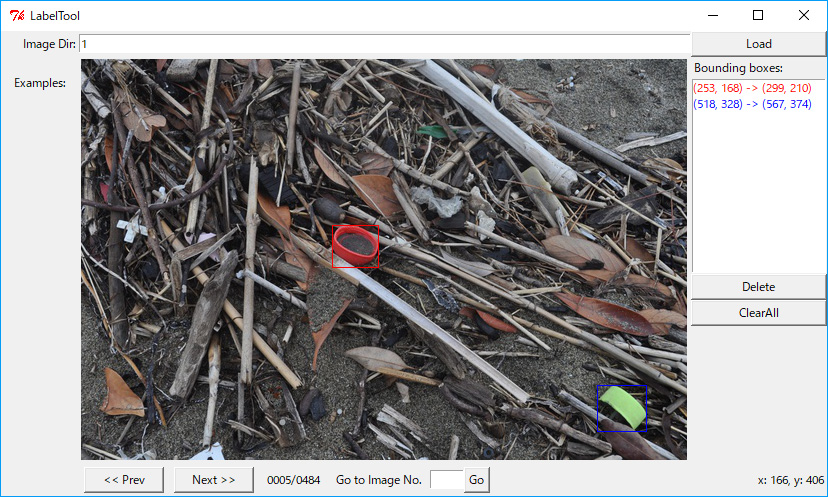

The trained model precisely predicted the bounding box of a plastic bottle cap.

Training Dataset

Until I actually trained a model, I wasn’t sure whether the amount of training data was sufficient, because plastic waste is very diverse in shape and color. As it turned out, as long as the objects are similar in shape, this amount of data proved to be enough.

On the last expedition to Makuhari Beach in June, I shot a lot of images. I had no difficulty finding bottle caps on the beach. That’s sad, but I wound up with 484 image files of bottle caps, which is a generous amount of training data for a single class.

The demanding part of preparing training data is annotating bounding boxes on each image file. I used a customised version of BBox-Label-Tool [1].
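To give an idea of what the annotations look like once they are on disk, here is a minimal sketch of reading one label file. It assumes the plain-text layout written by the original BBox-Label-Tool (first line is the number of boxes, then one `x1 y1 x2 y2` line per box in pixel coordinates); my customised version may differ, so treat the format as an assumption. The helper names `parse_bbox_label` and `to_normalised_center` are hypothetical, and the conversion to a normalised center/size box is only one common convention, not necessarily what the training code expects.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def parse_bbox_label(text: str) -> List[Box]:
    """Parse label text: first line is the box count, then one box per line.

    Assumed format (original BBox-Label-Tool); any extra column after the
    four coordinates, such as a class name, is ignored.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return []
    count = int(lines[0])
    boxes: List[Box] = []
    for ln in lines[1:1 + count]:
        x1, y1, x2, y2 = (int(v) for v in ln.split()[:4])
        # Normalise corner order in case the box was drawn right-to-left.
        boxes.append((min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2)))
    return boxes


def to_normalised_center(box: Box, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Convert a pixel box to (center_x, center_y, width, height) in [0, 1]."""
    x1, y1, x2, y2 = box
    return (
        (x1 + x2) / 2 / img_w,
        (y1 + y2) / 2 / img_h,
        (x2 - x1) / img_w,
        (y2 - y1) / img_h,
    )


# Example with a made-up label for a 1000x800 image.
sample_boxes = parse_bbox_label("1\n100 200 300 400\n")
sample_norm = to_normalised_center(sample_boxes[0], img_w=1000, img_h=800)
```

Keeping the parser separate from the coordinate conversion makes it easy to swap in whatever box convention a given detector needs.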

For convenience, I have open-sourced the dataset on GitHub [2] so that other engineers can use it freely.

marine_plastics_dataset

https://github.com/sudamasahiko/marine_plastics_dataset

Training

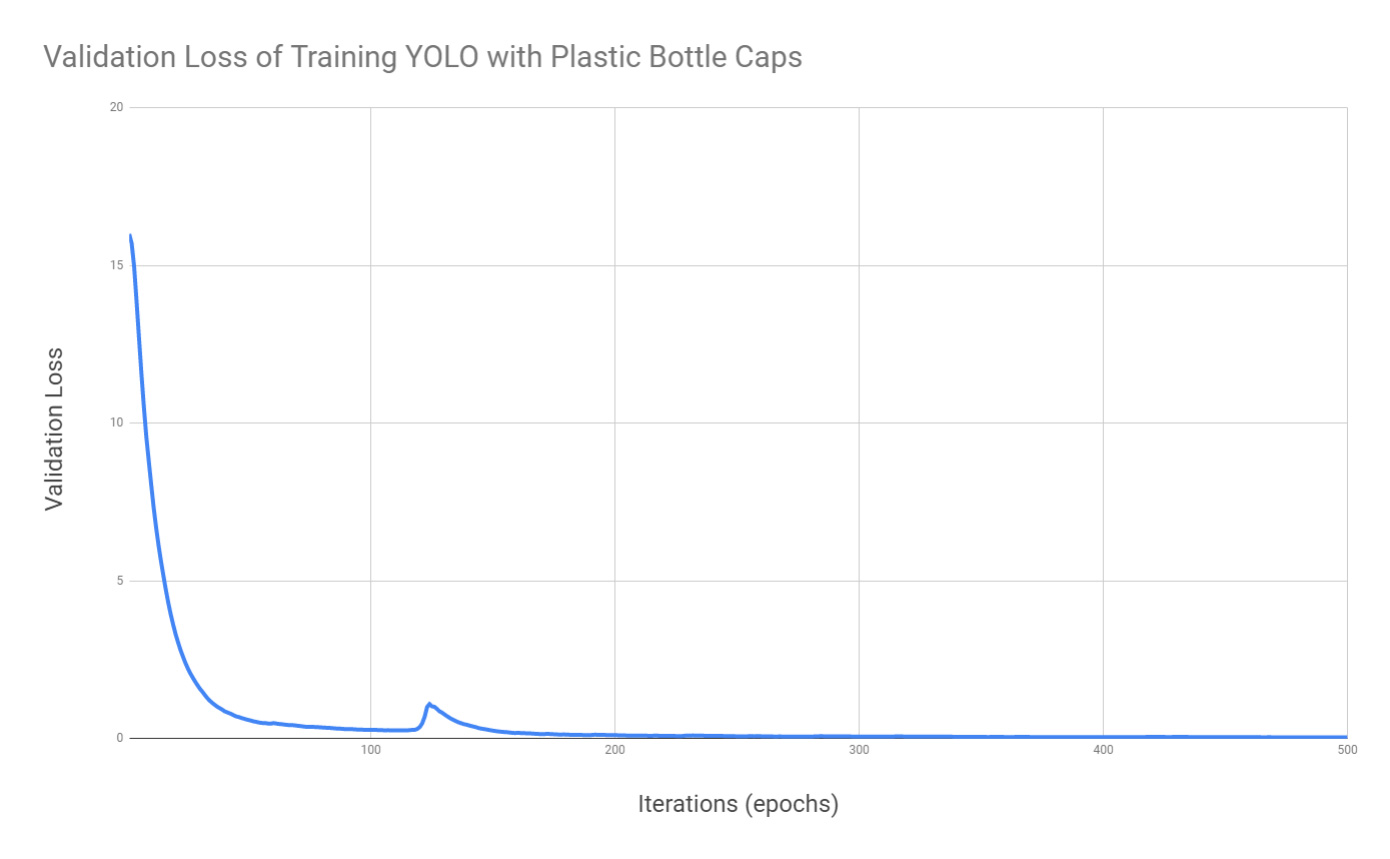

Training was done on an AWS P2 instance and took about 20 minutes. Over the course of training, the validation loss dropped rapidly.

One thing I want to note is the spike in the middle of training, which probably means that the network escaped from a local minimum and continued learning.

The average validation loss eventually dropped to about 0.04, although this number alone doesn’t tell me whether the model is good enough to perform the intended detection.

Test

In the test run, I used a couple of images that I had set aside from the training dataset, which means the trained network had never seen them. Prediction was quick, and I got the resulting images.
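Setting images aside like this can be sketched in a few lines. This is only an illustration: the file names, the number of held-out images (10), and the helper name `hold_out_split` are all hypothetical, and the split I actually used was not necessarily produced this way.

```python
import random
from typing import List, Sequence, Tuple


def hold_out_split(files: Sequence[str], n_test: int, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Return (train, test) lists with n_test files held out at random."""
    if not 0 <= n_test <= len(files):
        raise ValueError("n_test must be between 0 and the number of files")
    # Sort first so the result depends only on the seed, not on input order.
    shuffled = sorted(files)
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical file names standing in for the 484 bottle cap images.
files = [f"cap_{i:03d}.jpg" for i in range(484)]
train_files, test_files = hold_out_split(files, n_test=10)
```

Fixing the seed keeps the held-out set identical between runs, so the network never sees the test images even if training is repeated.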

It’s impressive that the predicted bounding boxes are so precise.
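One common way to put a number on how precise a predicted box is would be intersection over union (IoU) against the hand-annotated box. I did not compute it in this experiment; the sketch below only shows the calculation, assuming boxes are given as `(x_min, y_min, x_max, y_max)` in pixels. The function name `iou` and the example coordinates are made up for illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; clamped to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Made-up example: a prediction shifted by half a box in both directions.
score = iou((0, 0, 10, 10), (5, 5, 15, 15))
```

An IoU of 1.0 means the two boxes coincide exactly, and 0.0 means they do not overlap at all.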

Recap

The main takeaway of this experiment is that detecting plastic bottle caps just works, which encourages further experiments.

References

[1] BBox-Label-Tool

https://github.com/puzzledqs/BBox-Label-Tool

[2] marine_plastics_dataset

https://github.com/sudamasahiko/marine_plastics_dataset